Why Resume Shortlisting Is the Biggest Bottleneck in Startup Hiring

Discover why manual resume shortlisting slows startup hiring and how AI resume screening software fixes the biggest hiring bottleneck.

Introduction

If you've ever been part of a small, fast-growing team, you know the feeling. A crucial role opens up, you post the job, and the applications start pouring in. First it's a trickle, then a steady stream, and soon you're drowning in a sea of PDFs. Each one represents a potential teammate, a person who could help propel the company forward. Yet the sheer volume transforms a moment of opportunity into a logistical nightmare.

This is the resume shortlisting bottleneck, and for startups operating with razor-thin margins for error and time, it is more than an inconvenience: it is a critical business risk. A recurring theme in discussions on professional networks like LinkedIn is that founders and hiring managers spend an unsustainable amount of time merely sorting candidates, often with inconsistent and biased results. The manual process is breaking down. However, recent academic research offers a glimpse into a more efficient and equitable future, powered by sophisticated AI frameworks.

In this article, we'll dissect why traditional shortlisting fails startups, explore the promise of modern multi-agent LLM systems for screening, address the critical challenge of bias head-on, and outline a practical path forward for teams ready to optimise their hiring funnel.

The Anatomy of a Bottleneck: Why Manual Shortlisting Fails Startups

In startup terms, a "bottleneck" is any constraint that severely limits system throughput. Resume shortlisting fits this definition: it is the narrowest point in the hiring funnel, where a high volume of input (applications) must be drastically reduced by a limited processing capacity (human reviewers). It fails for three main reasons.

First, the process is prohibitively time-consuming. A hiring manager at an early-stage startup isn't just a hiring manager; they are also likely leading projects, managing stakeholders, and putting out daily fires. Dedicating hours to meticulously reviewing hundreds of resumes is a luxury they simply do not have. This time pressure often leads to hasty decisions or, worse, to qualified candidates being overlooked because a reviewer read only the first few applications in detail and skimmed the rest.

Second, and more insidiously, manual evaluation is inherently subjective and prone to bias. Without a standardised framework, evaluations vary wildly. One reviewer might prioritise pedigree from top-tier universities, while another values demonstrable project experience. This lack of standardisation makes it nearly impossible to compare candidates fairly. Human reviewers are also vulnerable to unconscious biases that can disadvantage candidates based on gender, race, or other demographic signals encoded subtly in a resume, and the 2024 study "Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval" shows that automated screening can reproduce such patterns as well [1]. For a startup aiming to build a diverse and innovative team, this is a serious flaw.

Finally, traditional keyword-matching is a blunt instrument. It overlooks candidates with non-traditional backgrounds or those who express their skills differently. A candidate might have built a complex system but describe it in simple terms, causing them to be filtered out by an algorithm, or a human eye, trained to spot specific jargon. This "lack of context" problem means startups risk missing out on exceptional talent that doesn't fit a preconceived mould.
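To make the keyword problem concrete, here is a minimal sketch of an exact-match filter. The keyword list, the threshold, and both resume snippets are hypothetical and exist only for illustration; real applicant tracking systems vary in how they match.

```python
# Hypothetical keywords a recruiter might configure for a backend role.
REQUIRED_KEYWORDS = {"kubernetes", "microservices", "ci/cd"}


def keyword_hits(resume_text: str, keywords: set[str]) -> set[str]:
    """Return the keywords that appear verbatim (case-insensitive) in the resume."""
    text = resume_text.lower()
    return {kw for kw in keywords if kw in text}


def passes_keyword_filter(resume_text: str, keywords: set[str], min_hits: int = 2) -> bool:
    """A candidate passes only if enough exact keywords are present."""
    return len(keyword_hits(resume_text, keywords)) >= min_hits


# Two descriptions of broadly the same work, one in jargon and one in plain terms.
jargon_resume = "Built microservices on Kubernetes with a full CI/CD pipeline."
plain_resume = (
    "Split a large application into small independent services, ran them in "
    "containers across a cluster, and automated testing and releases."
)

print(passes_keyword_filter(jargon_resume, REQUIRED_KEYWORDS))  # jargon version passes
print(passes_keyword_filter(plain_resume, REQUIRED_KEYWORDS))   # plain version is rejected
```

The second candidate describes comparable experience but never uses the configured terms, so an exact-match filter drops them before anyone reads the resume.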

A New Hope: The Rise of Multi-Agent LLM Frameworks

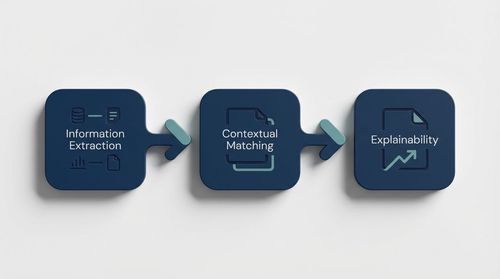

This is where artificial intelligence, particularly Large Language Models (LLMs), moves from hype to practical utility. The latest academic proposals are not simple keyword scanners but complex, context-aware systems. A 2025 paper, "AI Hiring with LLMs: A Context-Aware and Explainable Multi-Agent Framework for Resume Screening," exemplifies this shift [2]. Instead of a single model making a yes/no decision, it proposes a framework in which multiple specialised AI "agents" collaborate. Imagine a system where:

- An Information Extraction Agent parses the resume, but goes beyond bullet points to understand project scopes, responsibilities, and achievements.

- A Contextual Matching Agent compares the extracted information against the job description, evaluating not just keyword presence but semantic relevance and depth of experience.

- An Explainability Agent operates simultaneously, generating a clear, concise report on why a candidate was shortlisted, citing specific resume excerpts and matching criteria.

This multi-agent approach directly attacks the weaknesses of manual screening. It operates at a speed and scale impossible for humans, processes information consistently without fatigue, and begins to address the context problem by understanding semantic meaning. The inclusion of an explainability component is crucial for building trust, allowing human managers to understand the AI's reasoning and override it when necessary.
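The division of labour between the three agents can be sketched as a small pipeline. This is an illustrative skeleton, not the architecture from the cited paper: in a real system each function would wrap an LLM call, whereas here each one is stubbed with simple deterministic logic so the example runs on its own. All names (`extraction_agent`, `matching_agent`, `explainability_agent`, `screen`) are hypothetical, and the agents run one after another rather than simultaneously.

```python
from dataclasses import dataclass


@dataclass
class ExtractedProfile:
    skills: set[str]
    evidence: dict[str, str]  # skill -> resume sentence that supports it


@dataclass
class MatchResult:
    score: float
    matched: set[str]
    missing: set[str]


def extraction_agent(resume_text: str, skill_vocabulary: set[str]) -> ExtractedProfile:
    """Stub for an LLM extraction step: finds known skills sentence by sentence."""
    skills: set[str] = set()
    evidence: dict[str, str] = {}
    for sentence in resume_text.split("."):
        sentence = sentence.strip()
        for skill in skill_vocabulary:
            if skill in sentence.lower() and skill not in evidence:
                skills.add(skill)
                evidence[skill] = sentence
    return ExtractedProfile(skills, evidence)


def matching_agent(profile: ExtractedProfile, required_skills: set[str]) -> MatchResult:
    """Stub for semantic matching: here, plain set overlap with the job requirements."""
    matched = profile.skills & required_skills
    missing = required_skills - profile.skills
    score = len(matched) / len(required_skills) if required_skills else 0.0
    return MatchResult(score, matched, missing)


def explainability_agent(profile: ExtractedProfile, match: MatchResult) -> str:
    """Builds a short report that cites the resume excerpt behind each matched skill."""
    lines = [f"Score: {match.score:.2f}"]
    for skill in sorted(match.matched):
        lines.append(f'+ {skill}: "{profile.evidence[skill]}"')
    for skill in sorted(match.missing):
        lines.append(f"- {skill}: no supporting evidence found")
    return "\n".join(lines)


def screen(resume_text: str, required_skills: set[str]) -> tuple[MatchResult, str]:
    """Runs the three agents in sequence and returns the match plus its explanation."""
    profile = extraction_agent(resume_text, required_skills)
    match = matching_agent(profile, required_skills)
    return match, explainability_agent(profile, match)


resume = "Led a team of four engineers. Designed a python data pipeline. Wrote sql reports for finance."
match, report = screen(resume, {"python", "sql", "aws"})
print(report)
```

The point of the structure is that the explanation is built from the same evidence the score is built from, so a hiring manager can check each claim against the resume and override the result.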

The Double-Edged Sword: Confronting Bias in AI-Powered Screening

Adopting AI is not a magic bullet. A model is only as unbiased as the data it's trained on. If historical hiring data reflects human biases, an AI trained on that data will not only perpetuate but potentially amplify those biases. This is a well-known risk in machine learning. The aforementioned 2024 study on bias in resume screening via language models investigates this very issue [1]. The researchers found that off-the-shelf language models can retrieve and rank resumes in ways that reflect gender and racial stereotypes.

The problem is not beyond mitigation, however. Techniques like algorithmic fairness constraints, bias-aware training, and adversarial de-biasing can be integrated into the screening framework to actively counter these tendencies. This underscores a critical point for practitioners: the choice of tool matters. A startup must ask potential vendors not just about accuracy and speed, but about their specific methodologies for ensuring fairness. The goal is not to remove human judgment but to augment it with a tool that is more consistent and can be systematically audited and improved for fairness, which is a much harder task with human reviewers alone.
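One simple way to make "systematically audited" concrete is to compare shortlisting rates across groups. The sketch below uses the four-fifths rule of thumb from US employee-selection guidance, which flags any group whose selection rate is below 80% of the highest group's rate. This check is an assumption of this example, not a method taken from the cited study, and the group labels and counts are invented.

```python
from collections import Counter


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """records holds (group, was_shortlisted) pairs; returns the shortlist rate per group."""
    totals: Counter[str] = Counter()
    selected: Counter[str] = Counter()
    for group, shortlisted in records:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were shortlisted, so ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


# Invented audit log: 10 applicants per group, 5 and 3 shortlisted respectively.
records = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = sorted(group for group, ratio in ratios.items() if ratio < 0.8)
print(rates, flagged)
```

A flagged group is a prompt to investigate the tool and the criteria, not proof of discrimination on its own; small samples in particular can produce noisy ratios.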

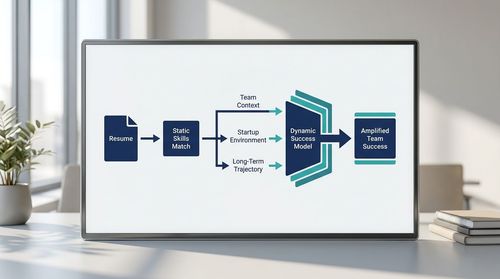

Beyond Resumes: Connecting Screening to Long-Term Success

An intriguing aspect of this evolution is how resume screening could be integrated into broader predictive frameworks. Another 2024 paper, "SSFF: Investigating LLM Predictive Capabilities for Startup Success through a Multi-Agent Framework," explores using LLMs to predict startup success by analysing a wide array of data [3]. While not directly about hiring, it hints at a future where a candidate's potential for contribution is assessed not just against a static job description, but against a dynamic model of what makes a team successful within a specific startup's context. Could a candidate's experiences and skills, as articulated in their resume and other artifacts, predict their ability to thrive in a high-uncertainty environment? This shifts the question from "can they do the job?" to "will they amplify our team's trajectory?" For a startup, the second question is far more valuable.

A Practical Roadmap for Startups

For a startup ready to tackle the shortlisting bottleneck, here is a grounded approach:

- Acknowledge the Bottleneck: The first step is recognising that manual shortlisting is an inefficient use of your most valuable resource: your team's time and focus.

- Define Clear, Bias-Conscious Criteria Before Screening: Work as a team to define what success looks like for the role. Use a structured scoring system based on skills, project experience, and cultural add (not just "fit") to create baseline standardisation. This also provides a benchmark against which to evaluate AI tools.

- Evaluate AI Tools as Collaborative Partners: When assessing an AI screening tool, prioritise explainability and fairness. Can it tell you why a candidate was selected? What measures does the provider have in place to mitigate bias? Treat it as a first-pass filter, not a final decision-maker.

- Maintain Human-in-the-Loop: The final shortlisting decision should always involve human judgment. The AI's role is to handle the volume and surface the most promising candidates, freeing you to engage in deeper evaluation during interviews.

- Iterate and Refine: Treat your hiring process like a product. Collect feedback on the quality of candidates shortlisted by the AI versus manual methods and continuously tweak your criteria and tool configuration.
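The structured scoring system from the second step can be as simple as a weighted rubric agreed before any resume is opened. The sketch below is one possible shape, assuming a 0 to 5 rating per criterion; the criterion names follow the list above, while the weights and the example ratings are hypothetical.

```python
# Hypothetical weights agreed by the team before screening; they must sum to 1.
RUBRIC_WEIGHTS = {"skills": 0.5, "project_experience": 0.3, "culture_add": 0.2}
MAX_RATING = 5


def weighted_score(ratings: dict[str, int], weights: dict[str, float] = RUBRIC_WEIGHTS) -> float:
    """Combine per-criterion ratings (0 to MAX_RATING) into a single score between 0 and 1."""
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the rubric criteria")
    for criterion, rating in ratings.items():
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"rating for {criterion} must be between 0 and {MAX_RATING}")
    return sum(weights[c] * ratings[c] for c in weights) / MAX_RATING


# Invented ratings from one reviewer for two candidates.
candidates = {
    "candidate_1": {"skills": 4, "project_experience": 5, "culture_add": 3},
    "candidate_2": {"skills": 3, "project_experience": 2, "culture_add": 5},
}

scores = {name: weighted_score(ratings) for name, ratings in candidates.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Because every reviewer rates the same criteria on the same scale, scores from different people become comparable, and the same rubric can serve as the benchmark when checking whether an AI tool's shortlist agrees with the team's own judgment.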

Conclusion

The resume shortlisting bottleneck is a formidable challenge, but it is no longer an insurmountable one. The convergence of proven operational fixes—like structured criteria—with advanced, context-aware AI frameworks offers a viable path forward.

- Manual shortlisting is a scalability trap that consumes precious time and introduces unacceptable levels of subjectivity and bias.

- Modern multi-agent LLM systems provide a blueprint for automation that is not only efficient but also context-aware and, crucially, explainable.

- Bias mitigation must be a first-class requirement, not an afterthought, when implementing any automated screening system.

- The ultimate goal is a synergistic human-AI workflow, where technology handles the heavy lifting of filtration, allowing human experts to focus on the nuanced task of selection.

For a startup, every hiring decision is a bet on the future. By evolving the resume shortlisting process from a dreaded chore into a strategic, data-informed function, founders and hiring managers can place those bets with greater confidence, clarity, and speed.

References

- [1] "Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval" (2024).

- [2] "AI Hiring with LLMs: A Context-Aware and Explainable Multi-Agent Framework for Resume Screening" (2025).

- [3] "SSFF: Investigating LLM Predictive Capabilities for Startup Success through a Multi-Agent Framework with Enhanced Explainability and Performance" (2024).