Can AI Really Reduce Hiring Bias? Here’s What the Data Shows

Introduction

In tech hubs from Bangalore to San Francisco, a quiet revolution is underway in hiring. Recruitment teams, overwhelmed by hundreds of applications per role, are increasingly turning to Artificial Intelligence (AI) for relief. The promise is tantalising: algorithms that screen resumes without human prejudice, leading to a more diverse and qualified workforce. Yet scepticism is palpable on social media and in industry forums, where critics warn that these systems can perpetuate the very inequalities they aim to eradicate.

Is AI a cure for hiring bias, or just a new vector for it? The question is more than academic; it is a pressing operational challenge for startups focused on speed and for hiring managers accountable for building effective teams. Recent research gives a nuanced answer: AI can reduce hiring bias, but it is not a silver bullet. Its effectiveness is not inherent; it results from deliberate design choices about data, algorithms, and human oversight.

In this article, we'll dissect the evidence, explore the technical architectures proposed to mitigate bias, and outline a practical roadmap for implementing AI-assisted hiring that is both efficient and equitable.

The Technical Blueprint for Mitigating Bias

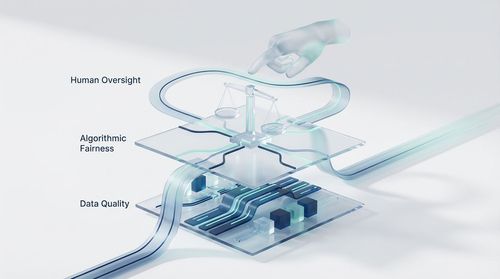

So, how do we build systems that leverage AI's strengths while mitigating its weaknesses? Recent scholarly work provides a technical blueprint centred on three pillars: data, algorithm design, and human-AI collaboration.

1. The Foundation: Why Training Data is Everything

The principle is simple but critical: the quality and diversity of training data significantly affect the fairness and accuracy of AI models. A model is only as unbiased as the data it learns from. For an AI hiring tool to be effective, it must be trained on a dataset that is not only large but also representative of candidates across gender, race, educational background, and career paths. The 2024 study "Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval" underscores this point, showing how language models can perpetuate societal biases present in their training corpora [1]. The mitigation strategy follows directly: organisations must invest in curating or sourcing diverse and representative datasets. This might involve synthesising data or using techniques like data augmentation to balance underrepresented profiles. For a startup, this means prioritising data quality from day one, because "garbage in, gospel out" (biased data whose outputs are treated as authoritative) is a real and dangerous possibility.
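
As a rough illustration of what balancing underrepresented profiles can look like, the sketch below measures each group's share of a dataset and then naively oversamples the smaller groups. The record layout, the `group` field, and both function names are assumptions made for this example; random duplication is only the simplest possible stand-in for the augmentation techniques mentioned above, not a recommended production method.

```python
import random
from collections import Counter

def representation(records, key):
    """Return each group's share of the dataset for the given attribute."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def oversample_to_balance(records, key, seed=0):
    """Duplicate randomly chosen records from smaller groups until every
    group is as large as the largest one (naive random oversampling)."""
    rng = random.Random(seed)
    by_group = {}
    for r in records:
        by_group.setdefault(r[key], []).append(r)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Top up the group with random duplicates; k is 0 for the largest group.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical training records: group "B" is underrepresented.
training = [{"group": "A"}] * 3 + [{"group": "B"}]
print(representation(training, "group"))
print(representation(oversample_to_balance(training, "group"), "group"))
```

Checking representation before and after any rebalancing step keeps the effect visible. Duplicating records adds no new information, so it cannot replace sourcing genuinely diverse data.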

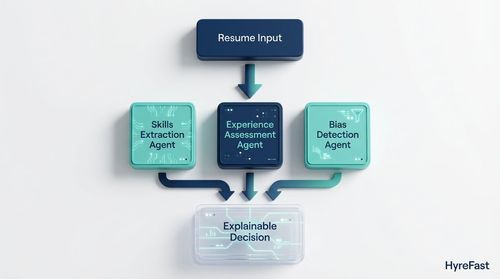

2. The Engine: Algorithmic Design for Fairness

Beyond the data, the design of AI algorithms and models can either perpetuate or mitigate bias. Modern research is moving beyond simple keyword-matching systems towards more sophisticated, context-aware frameworks. The 2025 paper "AI Hiring with LLMs: A Context-Aware and Explainable Multi-Agent Framework for Resume Screening" proposes a compelling architecture [2]. Instead of a single, monolithic model making a final decision, this framework employs multiple specialised "agents." One agent might extract key skills, another assess experience relevance, and a third check for potential bias in the evaluation. This multi-agent approach allows for a more nuanced analysis. Crucially, the framework incorporates explainability—it can provide a clear rationale for why a candidate was shortlisted or rejected. This transparency is vital for human reviewers to trust the system and to identify when the algorithm might be making an unfair inference. For a hiring manager, this translates to an audit trail, turning a cryptic decision into a debatable recommendation.
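
To make the multi-agent idea concrete, here is a minimal sketch in which three small functions stand in for the specialised agents and share one evaluation record that accumulates a score, a rationale, and review flags. It is not the architecture from [2]: the agents here are simple rules rather than LLMs, and every name, skill list, and scoring rule is a hypothetical chosen for illustration.

```python
import re
from dataclasses import dataclass, field

REQUIRED_SKILLS = {"python", "sql"}  # hypothetical job requirements
PROTECTED_TERMS = {"age", "gender", "married", "nationality"}  # illustrative only

@dataclass
class Evaluation:
    score: float = 0.0
    rationale: list = field(default_factory=list)  # human-readable audit trail
    flags: list = field(default_factory=list)      # items needing human review

def tokens(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))

def skill_agent(resume, evaluation):
    matched = sorted(REQUIRED_SKILLS & tokens(resume["text"]))
    evaluation.score += len(matched) / len(REQUIRED_SKILLS)
    evaluation.rationale.append(f"skills matched: {matched}")

def experience_agent(resume, evaluation):
    years = resume.get("years_experience", 0)
    evaluation.score += min(years, 5) / 5
    evaluation.rationale.append(f"experience: {years} years (credit capped at 5)")

def bias_check_agent(resume, evaluation):
    # Flag protected-attribute terms so a reviewer can confirm they played no role.
    found = sorted(PROTECTED_TERMS & tokens(resume["text"]))
    if found:
        evaluation.flags.append(f"protected terms present, needs human review: {found}")

AGENTS = [skill_agent, experience_agent, bias_check_agent]

def screen(resume):
    """Run every agent in order and return the combined evaluation."""
    evaluation = Evaluation()
    for agent in AGENTS:
        agent(resume, evaluation)
    return evaluation

result = screen({"text": "Python and SQL developer, married", "years_experience": 3})
print(result.score, result.rationale, result.flags)
```

Because each agent appends its own line to `rationale`, the score arrives with the reasons behind it, which gives the reviewer the audit trail described above.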

3. The Control System: The Essential Role of Human Oversight

Perhaps the most important point is that the way humans interact with an AI system shapes both its performance and its fairness. AI should be positioned as an assistant, not an autocrat, and the most effective systems are built for human-in-the-loop interaction. The "SSFF" framework for startup success prediction, although focused on a different domain, pairs a multi-agent system with enhanced explainability [3]; the same principle applies to hiring, because reviewers can only oversee recommendations they can inspect. In practice, the AI handles the heavy lifting of sorting and scoring candidates against objective criteria, but the final decision rests with a human who can consider contextual factors the AI might miss, such as career gaps or unique project experience. This collaboration combines the scalability of AI with the nuanced judgement of humans. Regular audits in which humans review the AI's recommendations, especially its rejections, are essential to create a feedback loop that continuously improves the system's fairness.
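
One simple way to put such an audit into practice is to pull a random sample of AI-rejected applications into a human review queue. The sketch below assumes each decision is a dictionary with an `ai_decision` field; that field, the function name, and the default 10% sampling fraction are illustrative choices, not values taken from the cited research.

```python
import math
import random

def sample_rejections_for_review(decisions, fraction=0.1, seed=None):
    """Return a random subset of AI-rejected applications for human review.

    At least one rejection is sampled whenever any exist, so a small
    hiring round is never skipped entirely.
    """
    rejected = [d for d in decisions if d["ai_decision"] == "reject"]
    if not rejected:
        return []
    k = max(1, math.ceil(len(rejected) * fraction))
    return random.Random(seed).sample(rejected, k)

# Hypothetical screening output: 20 rejections and 5 candidates advanced.
decisions = (
    [{"id": i, "ai_decision": "reject"} for i in range(20)]
    + [{"id": 100 + i, "ai_decision": "advance"} for i in range(5)]
)
review_queue = sample_rejections_for_review(decisions, fraction=0.25, seed=42)
print([d["id"] for d in review_queue])
```

Recording how often reviewers overturn the sampled rejections gives a running estimate of the false-negative rate, which is the signal the feedback loop needs.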

A Practical Roadmap for Organisations

For a founder or hiring manager looking to implement these findings, the path forward involves a proactive, multi-faceted strategy. It's about building a system, not just buying a tool.

- Curate Your Data Foundation: Before selecting a tool, audit the data it was trained on. Ask vendors about the diversity and sourcing of their training datasets. If you're building in-house, make data representativeness a non-negotiable KPI.

- Prioritise Explainability: Choose tools that provide clear, interpretable reasons for their decisions. Avoid "black box" solutions. The ability to ask "why did you rank this candidate highly?" is a fundamental feature for mitigating bias.

- Implement a Human-in-the-Loop Workflow: Design your hiring process so that AI recommendations are a starting point for human evaluation, not the finish line. Mandate that a human reviewer examines a random sample of AI-rejected applications to check for false negatives.

- Commit to Continuous Auditing: Bias mitigation is not a one-time setup. Regularly run bias audits on your hiring outcomes. Are certain groups being disproportionately rejected at the screening stage? Use these metrics to refine your process and retrain your models.
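
The question of whether certain groups are disproportionately rejected at screening can be checked with a few lines of code. The sketch below computes each group's selection rate and its ratio to the best-performing group's rate. The 0.8 threshold follows the "four-fifths" rule of thumb from US employment guidelines; it is an assumption added here, not something the cited papers prescribe, and the group labels and counts are made up for the example.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """outcomes: iterable of (group, advanced) pairs, where advanced is a bool."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, passed in outcomes:
        totals[group] += 1
        advanced[group] += int(passed)
    return {g: advanced[g] / totals[g] for g in totals}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates advanced, so ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}

# Hypothetical screening outcomes: 6 of 10 in group A advance, 3 of 10 in group B.
outcomes = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(outcomes)
ratios = impact_ratios(rates)
flagged = [g for g, r in ratios.items() if r < 0.8]  # four-fifths rule of thumb
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation rather than proof of bias, since small samples and differing applicant pools can produce the same pattern.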

Conclusions

The data presents a clear, if complex, picture. The question is not whether AI can reduce hiring bias, but how we can guide it to do so.

- AI's potential to reduce bias is real, but it is contingent on rigorous attention to data quality, algorithmic fairness, and human oversight.

- Techniques like multi-agent frameworks and explainable AI (XAI) are moving from academic research into practical tools, offering a path toward more transparent and equitable systems.

- Success hinges on viewing AI as an augmenting tool within a broader, bias-aware hiring culture—not as a standalone solution.

- The cost of getting this wrong is high, but the reward for getting it right—a more efficient process that builds stronger, more diverse teams—is a competitive advantage.

Future Directions

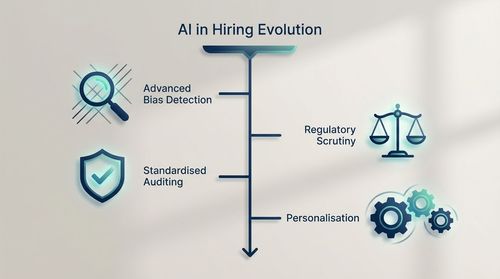

The field of AI-in-hiring is evolving rapidly. Based on the current research trajectory, we can anticipate several key developments:

- Advanced Bias Detection: More sophisticated agents dedicated solely to detecting nuanced and intersectional biases in real-time.

- Standardised Auditing Frameworks: Industry-wide standards and tools for routinely auditing AI hiring systems for fairness, similar to financial audits.

- Regulatory Scrutiny: Increased government regulation around the use of AI in employment decisions, necessitating even greater transparency.

- Personalisation and Adaptability: AI systems that can adapt their evaluation criteria based on the specific diversity and inclusion goals of an organisation.

The journey toward unbiased hiring is ongoing. By grounding our approach in data and a clear-eyed understanding of both the power and perils of AI, we can harness this technology to create genuinely more inclusive and equitable workplaces.

References

- [1] "Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval" (2024).

- [2] "AI Hiring with LLMs: A Context-Aware and Explainable Multi-Agent Framework for Resume Screening" (2025).

- [3] "SSFF: Investigating LLM Predictive Capabilities for Startup Success through a Multi-Agent Framework with Enhanced Explainability and Performance" (2024).

- Proceedings of the National Academy of Sciences study on AI-powered resume screening tools.

- Harvard Business Review study on AI perpetuating biases in hiring.