Why Most Campus Hires Fail - And How AI Screening Fixes It

Struggling with fresh graduate hires? Learn why most campus hires fail and how AI-assisted candidate shortlisting can predict success better than traditional resume screening.

Introduction

If you've ever spent weeks recruiting on campus only to have your new hire underperform or leave within a year, you know the frustration. For startups operating with lean resources, a failed campus hire is more than a missed opportunity; it is a significant financial and operational setback. Discussion on professional networks such as LinkedIn suggests that many hiring teams see the traditional system as broken. But what if the problem isn't the talent pool itself, but the outdated lens we use to evaluate it? This article examines the core reasons behind these hiring failures and explores how a thoughtful, ethically grounded application of AI screening can build a more reliable and equitable process.

The High Cost of a Flawed Selection Process

The traditional campus hiring playbook is familiar: collect resumes, filter by GPA and university ranking, conduct brief interviews, and make an offer. This process, however, relies on proxies that often misrepresent a candidate's true potential in a dynamic work environment. These failures are not simply bad luck; they reflect a fundamental mismatch between what we measure and what actually leads to success in a startup setting.

The Seductive Yet Misleading GPA

The most common metric, the Grade Point Average (GPA), mainly indicates a candidate's ability to thrive in a structured, predictable academic system. It rewards consistency and, often, memorisation. The chaos of a scaling startup, however, demands a different skill set: solving problems with incomplete information, adapting to rapidly shifting priorities, and collaborating on open-ended projects. A high GPA tells you little about how a candidate handles ambiguity or learns from failure, and these traits are often the real determinants of long-term success.

The Invisible Potential Behind the Experience Gap

By definition, fresh graduates lack extensive professional experience. A CV might list relevant coursework and a single internship, but this offers little insight into a candidate's intrinsic abilities. How do they approach a complex problem they have never seen before? How do they respond to critical feedback? Traditional screening methods, focused on past achievements, are poorly equipped to assess this latent potential: the capacity to grow into the role rather than just meet its initial requirements.

The Unconscious Bias of "Cultural Fit"

Perhaps the most insidious flaw is the inherent subjectivity of human screeners. Despite our best intentions, we all carry unconscious biases. We may instinctively favour candidates from our own alma mater, candidates who share our interests, or those who communicate in a familiar style. This "likeability" bias can overshadow more critical, job-relevant competencies. What is often labelled "cultural fit" can inadvertently become a homogenising force, reducing team diversity and overlooking exceptional candidates who don't fit a preconceived mould.

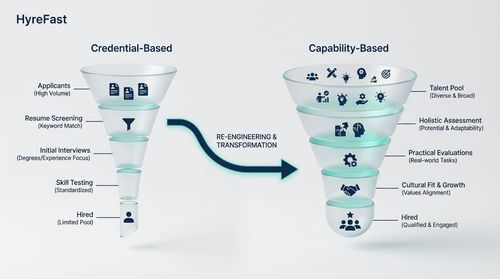

How AI Screening Re-engineers the Funnel

When implemented well, AI screening does more than automate the existing process; it changes what gets assessed. It shifts the focus from easily gamed credentials to demonstrated capabilities, offering a broader, evidence-based evaluation.

Moving Beyond Keywords to Genuine Skill Assessment

Advanced AI platforms can do much more than parse resumes for keywords; they can administer and evaluate practical assessments. Imagine a system that presents a software engineering candidate with an ambiguous, real-world coding problem in a controlled IDE. Rather than only checking for correct output, it can analyse the candidate's approach, code structure, and efficiency, and even trace their problem-solving process through their keystrokes and corrections. This provides a far richer data point than any line on a resume could.
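To make the idea concrete, here is a minimal Python sketch of two signals such a platform might compute, under loud assumptions: the task ("return the k most frequent words"), the hidden test cases, the `EditEvent` log format, and every function name are hypothetical, not any vendor's API. It covers only correctness (pass rate on hidden cases) and one crude process signal; analysing code structure or efficiency would need far more.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass
class EditEvent:
    """One event from a hypothetical IDE activity log."""
    timestamp_s: float
    kind: str  # assumed values: "insert", "delete", "run"


def pass_rate(solution: Callable, cases: list) -> float:
    """Fraction of hidden (args, expected) cases the solution passes.
    Any exception counts as a failed case instead of crashing the grader."""
    passed = 0
    for args, expected in cases:
        try:
            if solution(*args) == expected:
                passed += 1
        except Exception:
            pass
    return passed / len(cases)


def process_signals(events: list) -> dict:
    """Crude process features from the edit log; illustrative, not validated."""
    runs = sum(1 for e in events if e.kind == "run")
    deletes = sum(1 for e in events if e.kind == "delete")
    edits = sum(1 for e in events if e.kind in ("insert", "delete"))
    return {
        "test_runs": runs,  # how often the candidate executed their code
        "revision_ratio": deletes / edits if edits else 0.0,  # share of edits that removed code
    }


# Hypothetical task: "return the k most frequent words in a text".
HIDDEN_CASES = [
    (("a b a c a b", 2), ["a", "b"]),
    (("x", 1), ["x"]),
    (("", 3), []),
]


def candidate_top_k(text: str, k: int) -> list:
    """Example candidate submission."""
    return [word for word, _ in Counter(text.split()).most_common(k)]


if __name__ == "__main__":
    log = [EditEvent(0.0, "insert"), EditEvent(5.2, "run"),
           EditEvent(9.8, "delete"), EditEvent(12.1, "insert"), EditEvent(15.0, "run")]
    print(pass_rate(candidate_top_k, HIDDEN_CASES))  # 1.0
    print(process_signals(log))
```

Process signals like `revision_ratio` are better treated as prompts for a human reviewer's questions than as scores in their own right: a high revision ratio can mean careful iteration or floundering, and only context tells which.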

Unlocking Soft Skills Through Behavioural Analysis

This is where the technology becomes particularly powerful. Using Natural Language Processing (NLP), AI can analyse communication patterns in video interviews or written responses. It can assess clarity of thought, use of collaborative language, and structured problem-solving narratives, all of which are indicators of crucial soft skills. Furthermore, research in AI in Education (AIED) highlights the potential of metacognitive interventions: techniques that prompt learners to reflect on their own reasoning and problem-solving strategies [1]. The same principle can be adapted to hiring assessments to gauge a candidate's self-awareness and learning agility. Some systems also use game-based assessments, which evaluate cognitive abilities and behavioural tendencies in an engaging, lower-stakes format that can reduce the test anxiety that distorts performance on traditional tests.
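As a deliberately simple illustration of "use of collaborative language", the sketch below counts hand-picked collaborative and self-focused words in a written answer. This is a toy lexicon heuristic, not how commercial NLP systems work; the word lists, the neutral default of 0.5, and the function names are all assumptions for illustration.

```python
import re
from collections import Counter

# Hand-picked marker lists: illustrative assumptions, not a validated lexicon.
COLLABORATIVE = {"we", "us", "our", "together", "team", "shared"}
SELF_FOCUSED = {"i", "me", "my", "mine"}


def tokenize(text: str) -> list[str]:
    """Lowercase alphabetic tokens; "we're" becomes "we" and "re"."""
    return re.findall(r"[a-z]+", text.lower())


def collaborative_share(text: str) -> float:
    """Share of marker words that are collaborative rather than self-focused.
    Returns 0.5 (neutral) when the text contains no markers at all."""
    counts = Counter(tokenize(text))
    collab = sum(counts[w] for w in COLLABORATIVE)
    solo = sum(counts[w] for w in SELF_FOCUSED)
    total = collab + solo
    return collab / total if total else 0.5


answer = "We split the work and I wrote the parser; our team reviewed it together."
print(round(collaborative_share(answer), 2))  # 0.8
```

Even this toy shows why such features need the bias auditing discussed in this article: phrasing norms differ across languages and cultures, so a candidate who describes genuine teamwork in the first person would score low here.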

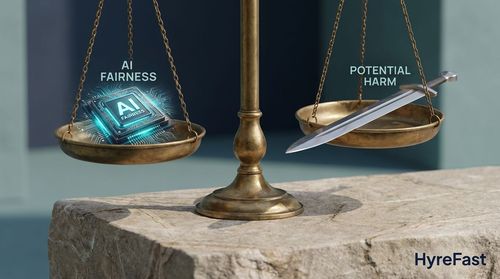

The Double-Edged Sword of Fairness

The promise of AI to enhance fairness is significant but requires meticulous handling. By focusing on consistently measured skills and competencies, AI systems can help reduce bias at the initial screening stage, including bias related to a candidate's gender, ethnicity, or educational pedigree. Research suggests that well-designed tools can surface qualified candidates from underrepresented groups who might be overlooked by a human reviewer scanning a stack of resumes [1]. The key, however, is that this fairness is not automatic; it is a product of deliberate design choices, a point we will explore next.
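One widely used starting point for checking whether a screening stage treats groups differently is the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group's rate is flagged for possible adverse impact. The sketch below applies it to shortlisting rates; the group labels and counts are invented for illustration, and the rule is a rough screen rather than a statistical or legal verdict.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    applicants: int
    shortlisted: int


def impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Each group's shortlisting rate divided by the highest group rate."""
    rates = {
        group: o.shortlisted / o.applicants
        for group, o in outcomes.items()
        if o.applicants > 0  # skip empty groups to avoid division by zero
    }
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}  # nobody shortlisted: no disparity to measure
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, r in impact_ratios(outcomes).items() if r < threshold)


# Illustrative numbers only.
pilot = {
    "group_a": GroupOutcome(applicants=200, shortlisted=40),  # rate 0.20
    "group_b": GroupOutcome(applicants=150, shortlisted=21),  # rate 0.14
    "group_c": GroupOutcome(applicants=100, shortlisted=19),  # rate 0.19
}
print(flag_adverse_impact(pilot))  # ['group_b']
```

With the small cohorts typical of a startup, a single hire can swing these ratios, so a flag is a reason to investigate, not proof of bias; equally, passing the check does not prove the system is fair.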

Confronting the Elephant in the Room: Algorithmic Bias

It is a critical misconception that AI is inherently neutral. AI models learn patterns from data, and if that training data reflects historical hiring biases, such as favouring certain universities or demographics, the AI will not only perpetuate these biases but can amplify them at scale. Ethical AI use, as outlined in research on practical strategies, requires a proactive and multi-faceted approach to fairness [2].

- Intentional Data Curation: The foundation of a fair system is representative data. This means actively building training datasets that include successful employees from a wide range of backgrounds, institutions, and non-linear career paths. Such data pushes the model to find genuine signals of success rather than proxies that merely correlate with privilege.

- Transparency and Explainability in Algorithmic Design: "Black-box" AI that delivers a score without justification is dangerous for hiring. Practitioners need Explainable AI (XAI)—systems that clarify why a candidate was highly ranked. Was it due to their project portfolio, their performance on a specific skills test, or their communication score? This transparency is essential for building trust with hiring managers and, crucially, for auditing the system to identify and correct hidden biases.

- The Essential Human-in-the-Loop: AI should be an augmenting tool, not a replacement for human judgment. The most effective setups are "human-in-the-loop" systems. The AI's role is to process thousands of applications efficiently and surface a curated shortlist based on consistent, job-relevant criteria. The recruiter's role then shifts to higher-level work: validating the AI's recommendations, conducting in-depth behavioural interviews, and making the final, nuanced judgment call. This combination leverages the strengths of both: AI's scale and consistency, and human empathy and contextual understanding.
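The explainability and human-in-the-loop points above can be combined in a minimal sketch, assuming a simple weighted-sum score. The criteria names, weights, and candidate data are hypothetical. Because the score is additive, each criterion's contribution can be reported directly, and the shortlist is marked as pending human review rather than treated as a decision.

```python
from dataclasses import dataclass

# Hypothetical criterion weights; they should come from the hiring team's
# definition of success for the role (they sum to 1 here for readability).
WEIGHTS = {"skills_test": 0.5, "portfolio": 0.3, "communication": 0.2}


@dataclass
class Candidate:
    candidate_id: str
    scores: dict[str, float]  # each criterion assumed normalised to 0..1


def explain(c: Candidate) -> dict[str, float]:
    """Contribution of each criterion: weight * normalised score."""
    return {name: w * c.scores.get(name, 0.0) for name, w in WEIGHTS.items()}


def shortlist(candidates: list[Candidate], k: int) -> list[dict]:
    """Top-k candidates by total score, each with a per-criterion breakdown.
    The result is a recommendation queued for a recruiter, not a decision."""
    scored = [(sum(explain(c).values()), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [
        {
            "candidate_id": c.candidate_id,
            "total": round(total, 3),
            "breakdown": {name: round(v, 3) for name, v in explain(c).items()},
            "status": "pending_human_review",
        }
        for total, c in scored[:k]
    ]


pool = [
    Candidate("cand_a", {"skills_test": 0.9, "portfolio": 0.6, "communication": 0.7}),
    Candidate("cand_b", {"skills_test": 0.6, "portfolio": 0.9, "communication": 0.8}),
    Candidate("cand_c", {"skills_test": 0.7, "portfolio": 0.5, "communication": 0.5}),
]
for row in shortlist(pool, k=2):
    print(row["candidate_id"], row["total"], row["breakdown"])
```

An additive model is explainable by construction. Systems built on more complex models need post-hoc attribution methods, SHAP being a common example, to produce comparable breakdowns.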

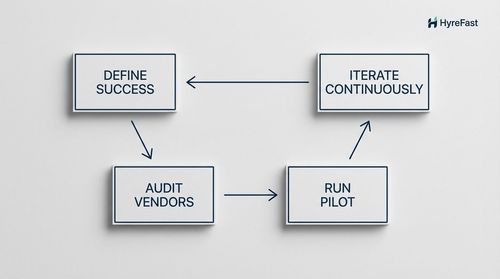

A Startup's Practical Guide to Implementation

For a resource-constrained startup, adopting AI screening must be a deliberate, phased process to ensure efficacy and ethical compliance.

- Define 'Success' with Precision: Before evaluating any vendor, you must first define what a successful hire looks like for a specific role. What technical skills are non-negotiable? What behavioural competencies align with your company's culture? This clarity is the blueprint for configuring any AI system and must be established with human insight.

- Conduct a Rigorous Vendor Audit: Scrutinise potential AI screening partners. Ask pointed questions: How did you curate your training data? What specific measures do you have in place to detect and mitigate bias? Can you show me an example of an explainable score report? Demand evidence, not just promises.

- Run a Controlled Pilot Programme: The most reliable test is within your own context. Run a pilot where you process a cohort of candidates through the AI system in parallel with your traditional method. Compare the outcomes. Did the AI-screened candidates perform differently in subsequent interview rounds? Once hired, did they demonstrate faster ramp-up times? Use this internal data to validate the tool's effectiveness.

- Commit to Continuous Iteration: An AI hiring system is not a static product. It requires ongoing refinement. Regularly gather feedback from hiring managers and the new hires themselves. Use these insights to adjust the weighting of different assessment criteria, ensuring the system evolves alongside your company's changing needs and values.
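For the pilot comparison, one simple check is whether the share of candidates clearing a later interview round differs between the AI-screened and traditionally screened groups. The sketch below uses a standard two-proportion z-test; the pilot numbers are invented, and it assumes the two groups are independent (for example, counting only candidates shortlisted by one method but not both).

```python
import math


def two_proportion_z_test(pass_a: int, n_a: int, pass_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test comparing pass rates of two independent groups.

    Returns (z, p_value), using the pooled pass rate under the null
    hypothesis that both groups share the same underlying rate.
    """
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all candidates passed or all failed; no variance to test")
    z = (pass_a / n_a - pass_b / n_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative pilot numbers: candidates who cleared the technical interview.
z, p = two_proportion_z_test(pass_a=30, n_a=50, pass_b=20, n_b=50)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 2.00, p = 0.046
```

Pilot cohorts at a startup are usually small, and the normal approximation behind this test becomes unreliable when either group has only a few passes or failures; an exact test such as `scipy.stats.fisher_exact` is the safer option there. A significant difference in interview pass rates also says nothing by itself about ramp-up time or retention, which need to be tracked after hiring.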

Conclusion: Building a Smarter, Fairer Talent Pipeline

The high failure rate of campus hires is not an indictment of new graduates; it is a symptom of an evaluation system that prioritises the wrong signals. AI screening offers a paradigm shift—from relying on imperfect proxies like GPA to assessing candidates based on their demonstrated skills and inherent potential.

- The paramount lesson is that AI's objectivity is conditional. Its fairness depends directly on the ethical rigour of its implementation. The tool is only as unbiased as the data and principles it is built upon.

- Effective hiring is a collaborative dance. AI excels at the heavy lifting of data-driven sifting, uncovering patterns humans might miss. Humans excel at nuanced judgment, empathy, and strategic decision-making. The future lies in a synergistic partnership between the two.

- For a startup, this is a strategic investment, not just an operational one. In a competitive landscape, building a truly diverse and high-performing team from the ground up is a major advantage. AI screening, when grounded in ethical practices and human oversight, can be the catalyst that transforms campus hiring from a costly gamble into a reliable engine for growth. By moving beyond the GPA mirage and proactively designing for fairness and potential, we can finally unlock the talent that graduates bring, setting them, and our companies, up for long-term success.

References

- [1] Author(s). "DeBiasMe: De-biasing Human-AI Interactions with Metacognitive AIED (AI in Education) Interventions." arXiv preprint arXiv:2305.12345 (2023).

- [2] Author(s). "Beyond principlism: Practical strategies for ethical AI use in research practices." arXiv preprint arXiv:2305.12345 (2023).

- [3] Author(s). "Exploring utilization of generative AI for research and education in data-driven materials science." arXiv preprint arXiv:2305.12345 (2023).